100G optics are hitting the market en masse, and 400G is expected sometime next year. Yet data traffic continues to grow, and the pressure on data centers is only ramping up.

Balancing the three-legged table

In the data center, capacity is a matter of checks and balances among servers, switches and connectivity. Each pushes the others to be faster and less expensive. For years, switch technology was the primary driver. With the introduction of Broadcom’s StrataXGS® Tomahawk® 3, data center managers can now boost switching and routing speeds to 12.8 Tbps and reduce their cost per port by 75 percent. So the limiting factor now is the CPU, right? Wrong. Earlier this year, NVIDIA introduced its new Ampere GPU architecture for servers. It turns out that the graphics processors developed for gaming are well suited to the training and inference processing needed for artificial intelligence (AI) and machine learning (ML).

The bottleneck shifts to the network

With switches and servers on schedule to support 400G and 800G, the pressure shifts to the physical layer to keep the network balanced. IEEE 802.3bs, approved in 2017, paved the way for 200G and 400G Ethernet. However, the IEEE has only recently completed its bandwidth assessment for 800G and beyond. Given the time required to develop and adopt new standards, we may already be falling behind. So cabling and optics OEMs are pressing ahead to maintain momentum as the industry prepares for the transitions from 400G to 800G, 1.2 Tbps and beyond. Here are some of the trends and developments we’re seeing.

Switches on the move

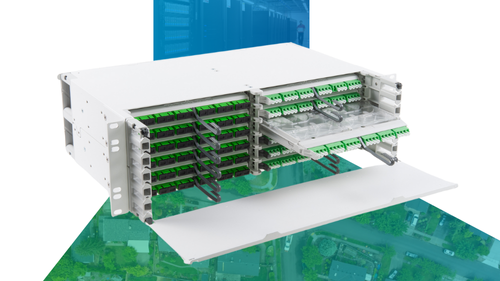

For starters, server-row configurations and cabling architectures are evolving. Aggregation switches are moving from the top of the rack (TOR) to the middle of the row (MOR) and connect to the switch fabric through a structured cabling patch panel. As a result, migrating to higher speeds involves simply replacing the server patch cables rather than the longer switch-to-switch links. This design also eliminates the need to install and manage 192 active optical cables (AOCs) between the switch and servers.

Transceiver form factors changing

New designs in pluggable optical modules are giving network designers additional tools, led by the 400G-enabling QSFP-DD and OSFP. Both form factors feature eight lanes, with the optics providing eight 50G PAM4 channels. When deployed in a 32-port configuration, QSFP-DD and OSFP modules enable 12.8 Tbps in a 1RU device. Both form factors support current 400G optical modules as well as next-generation 800G modules. Using 800G optics, switches will achieve 25.6 Tbps per 1RU.
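The capacity figures above come from multiplying ports by lanes by per-lane rate. The minimal sketch below checks that arithmetic. It assumes 32 ports of eight lanes each, with 50G lanes for 400G modules and 100G lanes for 800G modules (the 8x100G lane split matches the 800G SR module discussed later); `switch_capacity_tbps` is a hypothetical helper, not a vendor API.

```python
def switch_capacity_tbps(ports: int, lanes_per_port: int, gbps_per_lane: int) -> float:
    """Return total front-panel capacity in Tbps (ports x lanes x Gbps per lane)."""
    return ports * lanes_per_port * gbps_per_lane / 1000


# Assumed configuration: a 1RU switch with 32 QSFP-DD or OSFP ports of 8 lanes each.
capacity_400g = switch_capacity_tbps(ports=32, lanes_per_port=8, gbps_per_lane=50)
capacity_800g = switch_capacity_tbps(ports=32, lanes_per_port=8, gbps_per_lane=100)

print(f"32 x 400G: {capacity_400g} Tbps")
print(f"32 x 800G: {capacity_800g} Tbps")
```

Doubling the per-lane rate while keeping the same eight-lane form factor is what doubles the 1RU capacity from 12.8 to 25.6 Tbps.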

New 400GBASE standards

There are also more connector options to support 400G short-reach multimode fiber (MMF) modules. The 400GBASE-SR8 standard allows for either a 24-fiber MPO connector (favored for legacy applications) or a single-row 16-fiber MPO connector. The early favorite for cloud-scale server connectivity is the single-row MPO16. Another option, 400GBASE-SR4.2, uses a single-row MPO12 with bidirectional signaling, making it useful for switch-to-switch connections. IEEE 802.3 400GBASE-SR4.2 is the first IEEE standard to use bidirectional signaling on MMF, and it introduces OM5 multimode cabling. OM5 fiber extends multi-wavelength support for applications like BiDi, giving network designers 50 percent more distance than with OM4.
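The connector choices follow from simple fiber arithmetic. The sketch below assumes every 400G variant carries eight 50G lanes in each direction, and it models BiDi as sending one transmit lane and one receive lane over the same fiber on different wavelengths. `MmfVariant` and its methods are hypothetical names for illustration, not part of any standard.

```python
from dataclasses import dataclass


@dataclass
class MmfVariant:
    name: str
    lanes: int                 # 50G lanes per direction (assumed 8 for 400G)
    bidi: bool                 # True if each fiber carries one Tx and one Rx lane
    connector_positions: int   # fiber positions in the MPO connector

    def fibers_used(self) -> int:
        # Conventional parallel optics dedicate one fiber to each Tx lane and
        # one to each Rx lane. BiDi shares a fiber between a Tx and an Rx lane,
        # halving the fiber count.
        return self.lanes if self.bidi else 2 * self.lanes

    def unused_positions(self) -> int:
        # Connector positions left dark for this variant.
        return self.connector_positions - self.fibers_used()


variants = [
    MmfVariant("400GBASE-SR8 on MPO24", lanes=8, bidi=False, connector_positions=24),
    MmfVariant("400GBASE-SR8 on MPO16", lanes=8, bidi=False, connector_positions=16),
    MmfVariant("400GBASE-SR4.2 on MPO12", lanes=8, bidi=True, connector_positions=12),
]

for v in variants:
    print(f"{v.name}: {v.fibers_used()} fibers used, {v.unused_positions()} unused")
```

Under these assumptions, SR8 fills every position of a single-row MPO16 but leaves eight positions of an MPO24 unused, while SR4.2 needs only eight of the twelve MPO12 positions.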

But are we going fast enough?

Industry projections indicate that 800G optics will be needed within the next two years. So, in September 2019, an 800G pluggable multi-source agreement (MSA) was formed to develop new applications, including a low-cost 8x100G SR multimode module for 60- to 100-meter spans. The goal is an early-market, low-cost 800G SR8 solution that would enable data centers to support low-cost server applications. The 800G pluggable would also support increasing switch radix and decreasing per-rack server counts.
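Breakout is one way a high-speed port can raise effective switch radix: an 8x100G module can be split into several lower-speed links, each serving a different server. The sketch assumes a hypothetical 25.6 Tbps switch with 32 ports of 8x100G and that each breakout link uses a whole number of 100G lanes. `breakout_links` is an illustrative helper, not a vendor API, and the breakout modes a real switch and module support will vary.

```python
def breakout_links(ports: int, lanes_per_port: int, lanes_per_link: int) -> int:
    """Count the links a switch exposes when each port is split into
    breakout links of lanes_per_link lanes each."""
    if lanes_per_link <= 0 or lanes_per_port % lanes_per_link != 0:
        raise ValueError("lanes_per_link must evenly divide lanes_per_port")
    return ports * (lanes_per_port // lanes_per_link)


# Hypothetical 25.6 Tbps switch: 32 ports of 800G, each made of 8 x 100G lanes.
PORTS, LANES = 32, 8

print(breakout_links(PORTS, LANES, lanes_per_link=8))  # 32 links of 800G
print(breakout_links(PORTS, LANES, lanes_per_link=2))  # 128 links of 200G
print(breakout_links(PORTS, LANES, lanes_per_link=1))  # 256 links of 100G
```

The same switch can therefore reach 32, 128 or 256 endpoints depending on breakout, which is how a higher-radix switch can serve many racks that each hold fewer servers.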

Meanwhile, the IEEE 802.3db task force is working on low-cost VCSEL solutions for 100G per wavelength and has demonstrated the feasibility of reaching 100 meters over OM4 MMF. If successful, this work could shift server connections from in-rack direct-attach copper (DAC) cables to high-radix switches at the middle or end of the row (MOR/EOR). It would offer low-cost optical connectivity and extend long-term application support for legacy MMF cabling.

So, where are we?

Things are moving fast, and—spoiler alert—they’re about to get much faster. The good news is that, between the standards bodies and the industry, significant and promising developments are underway that could get data centers to 400G and 800G. Clearing the technological hurdles is only half the challenge, however. The other is timing. With refresh cycles running every two to three years and new technologies coming online at an accelerating rate, it becomes more difficult for operators to time their transitions properly—and more expensive if they fail to get it right.

There are lots of moving pieces. A technology partner like CommScope can help you navigate the changing terrain and make the decisions that are in your best long-term interest.